While I genuinely fear the impact of disinformation and algorithmic manipulation on our society, I often do enjoy the academic elements of the debate. Today is not one of those days.

Last week, Matt Watson posted a video (included at end of newsletter) on YouTube about "a wormhole into a soft-core pedophilia ring on YouTube."

"YouTube's recommended algorithm is facilitating pedophiles' ability to connect with each other, trade contact info, and link to actual CP [child pornography] in the comments," Watson reported. "I can consistently get access to it from vanilla, never-before-used YouTube accounts via innocuous videos in less than ten minutes, in sometimes less than five clicks."

I rant to friends regularly about algorithmic manipulation. I tweet, I write, I deleted my Facebook + Instagram, I watch Trump run our country into the ground. It’s all happening, but it takes a lot to absolutely churn my stomach and push me to a state of near-vomiting.

This did it.

OPTIMIZING PREDATION

The summary (and this WIRED piece is a well-structured overview of the problem): People find videos of children doing things like playing Twister, doing gymnastics, eating lollipops, wearing swimsuits, or anything else mildly suggestive, and then, alongside disgusting comments, link out to actual child pornography. This is really, really bad.

But what makes this worse is that the YouTube algorithm does the heavy lifting of connecting this entire horrific niche with robotic precision. The WIRED story mentions how, if you search for “girls” and “yoga”, the autocomplete suggestions will point you in the direction of other searches dominated by pedophiles.

This story came out 2 days ago. Advertisers are pulling out, YouTube is issuing statements…and this is still happening!!!!

WARNING:

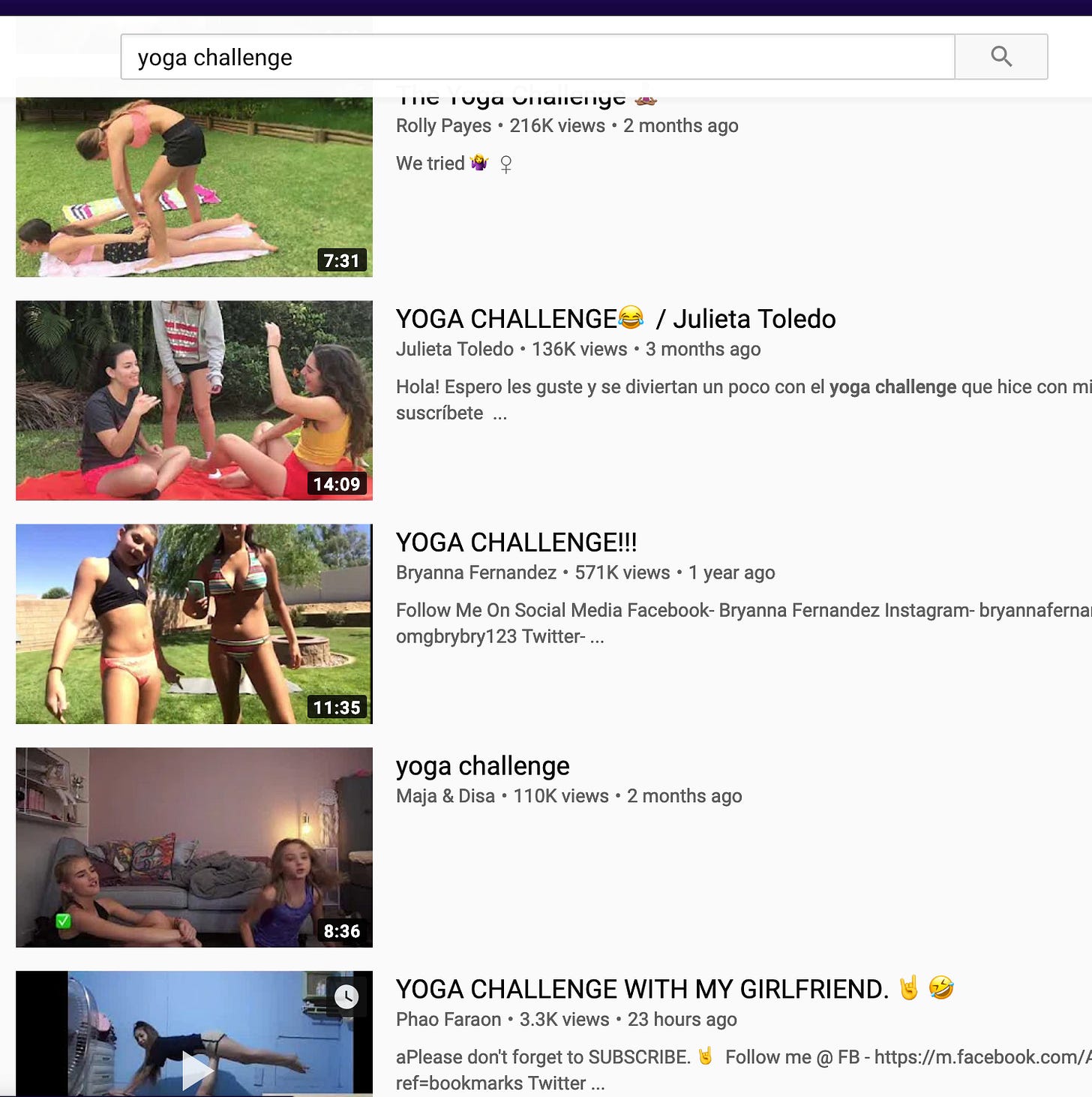

I am disgusted pasting this image here, but it’s honestly among the more innocent of the search results from one of these rabbit holes. One of the suggested terms for “girls yoga” was the innocuous sounding “yoga challenge”. Send this to every friend who works at YouTube, or Google, and remind them they are directly involved in this. This is still up after 48 hours, and just with one quick sickening visual scan, you know exactly what’s happening here:

I wish Substack allowed me to make that image smaller, because I understand if you don’t want to keep reading further, but please do. YouTube’s response says so much.

There was also a memo sent to advertisers that AdWeek has posted:

ANATOMY OF A RESPONSE

How many times have we heard these sentiments??

We have been doing a lot of work on the problem

We have already removed <<insert large number>> of posts

We are building new technological tools that will be deployed soon

We are asking our users to take a leadership role in stopping this

Just. Stop. It.

You cannot use the “we’ve made a lot of progress, but know there’s more work to do” excuse when it comes to pedophilia. Saying you’ve removed millions of videos, when you brag in earnings calls about the billions of videos you host, does not make this better. But for some reason this statement from a YouTube spokeswoman to Bloomberg bothered me even more:

“Any content --including comments -- that endangers minors is abhorrent and we have clear policies prohibiting this on YouTube. We took immediate action by deleting accounts and channels, reporting illegal activity to authorities and disabling violative comments,” a spokeswoman for YouTube said in an email.

Total ad spending on the videos mentioned was less than $8,000 within the last 60 days, and YouTube plans refunds, the spokeswoman said.

The “less than $8,000” figure is clearly an attempt to minimize the impact. But for us mere mortals who don’t deal in the scale of billions, what they are telling us is, “Brand advertisers paid out $8,000 in ad revenue based on the clicks of pedophiles. Don’t worry, we’ll refund you, but c’mon, it’s not that bad.”

It is that bad. Imagine there was a pedophile ring at my kid’s school, and the administration told us, “Don’t worry, we got some of them, but there will naturally continue to be others around preying on your children. Maybe you could help us out?”

Google made $30 billion in net income in 2018. I hope everyone can retain that number, $30 billion in pure profit. It’s a cash machine. If you have a pedophile problem on your platform, shut this shit down and figure it out. More than 48 hours after gloating about the millions of posts taken down, users are still actively directed by the algorithm to hidden pedo searches like “yoga challenge”.

Your algorithm is your responsibility. Not the users, not the government, not society-at-large, but you, the workers at the company that reaps billions in profits. This is not okay and the classic Zuckerbergian Dodge of “making progress, building AI tools” is not okay. Every employee at Alphabet has a responsibility to fix this and bears complicity until it is done.

***I’ve probably set off every alarm bell in Google searches, but when looking for the original YouTube video and conducting this search, what a weird set of results: